In previous posts, I have explained Dynamic Memory and Non-Uniform Memory Access (NUMA). Both are memory optimization features of Hyper-V, and each has different advantages. Most people are not fully aware of those benefits, and they probably don’t realize that the two features are mutually exclusive. In this article, we’ll do a quick refresher on the two features and then explain why you would choose one over the other.

Virtual NUMA

Put very simply, Non-Uniform Memory Access (NUMA) is how hypervisors, operating systems, and some applications deal with the processor and memory design of a physical machine’s motherboard. The typical machine that we see in virtualization has two processors (sockets with multiple cores) and a number of memory DIMMs.

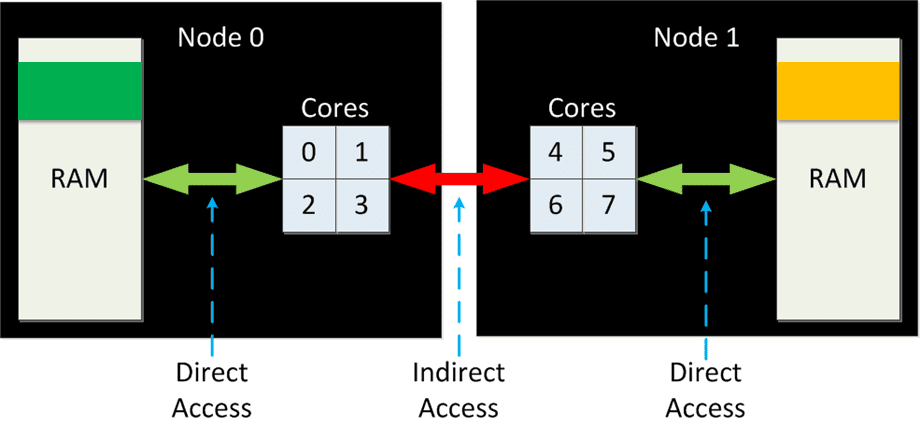

In this server, each processor has a direct bus connection to half of the memory; in a machine with four sockets, each processor would typically be directly connected to only a quarter of it. A processor, together with the memory it is directly connected to, is known as a NUMA node. So this machine, with two processors, has two NUMA nodes, each with half of the total cores and half of the DIMM slots on the motherboard. This is why OEMs advise you to balance the memory placement on the motherboard. An operating system or hypervisor that’s installed on this physical server will do its best to schedule processes and assign memory within a NUMA node.

Here’s an example. If Hyper-V is running a virtual machine with 2 vCPUs, its virtual processors will be scheduled on logical processors within one NUMA node, and Hyper-V will do its best to assign memory from that same NUMA node.

Staying within a NUMA node, known as alignment, offers the best possible performance for the process in question, and it also benefits other processes. If a NUMA node is memory constrained, then the hypervisor/OS has no choice but to assign memory from another NUMA node. There’s no direct path to the memory bank of the other NUMA node, so processes running in the original node must take an indirect and costly path through the other node’s processor to reach that memory. This degrades the performance of the process (such as a virtual machine on a hypervisor) and of other processes on the server. This is why Hyper-V always tries to keep virtual machines NUMA aligned; only when it has no other choice will it allow a virtual machine to span NUMA nodes.
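To make the local-first behavior concrete, here is a toy Python model of the idea. It is not how Hyper-V’s memory manager is implemented: `NumaNode` and `allocate` are hypothetical names, and the model only tracks free megabytes per node. It tries the virtual machine’s home node first and falls back to another node (spanning) only when the home node runs out.

```python
from dataclasses import dataclass


@dataclass
class NumaNode:
    # One physical NUMA node: a processor plus its directly attached memory.
    node_id: int
    free_mb: int


def allocate(nodes: list[NumaNode], home: int, request_mb: int) -> dict[int, int]:
    """Assign request_mb of memory, preferring the home (local) node.

    Returns {node_id: MB taken}. Any node other than `home` in the result
    is remote memory, reached through the other node's processor.
    """
    if request_mb > sum(n.free_mb for n in nodes):
        raise MemoryError("not enough free memory on the host")
    by_id = {n.node_id: n for n in nodes}
    # Local node first, then every other node in list order.
    order = [home] + [n.node_id for n in nodes if n.node_id != home]
    placement: dict[int, int] = {}
    remaining = request_mb
    for node_id in order:
        if remaining == 0:
            break
        take = min(remaining, by_id[node_id].free_mb)
        if take > 0:
            by_id[node_id].free_mb -= take
            placement[node_id] = take
            remaining -= take
    return placement


nodes = [NumaNode(0, 8192), NumaNode(1, 8192)]
print(allocate(nodes, home=0, request_mb=4096))  # {0: 4096}  (aligned)
print(allocate(nodes, home=0, request_mb=6144))  # {0: 4096, 1: 2048}  (spans nodes)
```

In the second call the home node can only cover 4,096 MB, so the remaining 2,048 MB comes from node 1 and is reached over the slower, indirect path.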

Large-Scale Virtual Machines

If a host has two six-core Intel Xeon CPUs, then there are a total of 12 cores, and 24 logical processors with Hyper-Threading enabled. Windows Server 2012 was the first release to allow Hyper-V virtual machines to run with more than four virtual CPUs (up to 64, depending on the number of logical processors in the host). In theory, we can run a virtual machine with 24 vCPUs on that host. That virtual machine will span NUMA nodes, because it runs on logical processors in both NUMA nodes.
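The arithmetic in this example can be sketched in a few lines of Python. The function names are illustrative, and the sketch assumes one NUMA node per socket with the logical processors split evenly between them, as in the two-socket host described above.

```python
import math


def logical_processors(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    # Hyper-Threading exposes two logical processors per physical core.
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


def max_vcpus(host_lps: int) -> int:
    # Windows Server 2012 Hyper-V: up to 64 vCPUs, limited by the host's logical processors.
    return min(64, host_lps)


def nodes_spanned(vcpus: int, lps_per_node: int) -> int:
    # Fewest NUMA nodes whose logical processors can hold every vCPU.
    return math.ceil(vcpus / lps_per_node)


host_lps = logical_processors(sockets=2, cores_per_socket=6, hyperthreading=True)
lps_per_node = host_lps // 2  # assumption: two sockets -> two NUMA nodes
print(host_lps, lps_per_node, max_vcpus(host_lps))  # 24 12 24
print(nodes_spanned(24, lps_per_node))  # 2
print(nodes_spanned(8, lps_per_node))   # 1
```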

Microsoft created guest-aware NUMA to deal with this scenario. Whether the virtual machine is running Windows Server or Linux, Hyper-V will reveal the physical architecture that the virtual machine is running on to the guest OS. This allows the guest OS and any NUMA-aware applications, such as SQL Server, to schedule processes and assign memory according to the physical NUMA boundaries that the virtual machine is running on. This is what allows Hyper-V virtual machines to scale up and maintain similar levels of performance to their physical alternatives.

Virtual NUMA and Live Migration

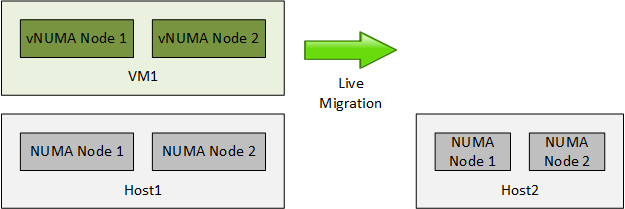

What happens if you live migrate a virtual machine (VM1) from Host1, which has large NUMA nodes, to Host2, which has smaller NUMA nodes? Guest-aware NUMA communicates the NUMA architecture to the guest OS only at boot. So if VM1 moves to Host2 successfully, its virtual NUMA nodes will be split across Host2’s smaller physical NUMA nodes. The guest NUMA nodes will no longer be aligned to the physical NUMA nodes of Host2, and performance will degrade.

The best way to prevent splitting is to include only identical hosts in a Live Migration domain, such as a Hyper-V cluster; identical hosts are also ideal for maintaining processor feature compatibility. But what if that isn’t an option? You can customize the virtual NUMA architecture of a virtual machine to fit within the NUMA node architecture of the smallest host; this prevents splitting because the virtual NUMA nodes will fit on all hosts. And there’s a third, more drastic option.
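Sizing virtual NUMA nodes to the smallest host amounts to taking the minimum per-node processor count and memory across every host the virtual machine might move to. The following Python sketch illustrates that check. `NodeSize`, `splits_on`, and `smallest_host_node` are hypothetical helpers, and the per-node figures for Host1 and Host2 are made-up values for illustration.

```python
from typing import NamedTuple


class NodeSize(NamedTuple):
    lps: int        # logical processors per NUMA node
    memory_mb: int  # memory per NUMA node


def splits_on(guest_node: NodeSize, host_node: NodeSize) -> bool:
    # A guest NUMA node splits if it is bigger than the host's physical
    # NUMA node in either dimension.
    return guest_node.lps > host_node.lps or guest_node.memory_mb > host_node.memory_mb


def smallest_host_node(hosts: dict[str, NodeSize]) -> NodeSize:
    # Take the smallest value of each dimension across all hosts in the
    # Live Migration domain, so the guest node fits everywhere.
    return NodeSize(
        lps=min(h.lps for h in hosts.values()),
        memory_mb=min(h.memory_mb for h in hosts.values()),
    )


hosts = {"Host1": NodeSize(16, 65536), "Host2": NodeSize(12, 49152)}
sized_for_host1 = hosts["Host1"]
print(splits_on(sized_for_host1, hosts["Host2"]))  # True
fitted = smallest_host_node(hosts)
print(fitted)  # NodeSize(lps=12, memory_mb=49152)
print(any(splits_on(fitted, h) for h in hosts.values()))  # False
```

Taking each minimum independently is deliberately conservative: the fitted node may be smaller than any single real host, but it cannot split on any of them.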

Disable NUMA Spanning

You can disable NUMA spanning in the host settings of a Hyper-V host. This drastic option will prevent any virtual machine from spanning NUMA nodes. There’s a good and a bad side to this.

On the positive side:

- You get predictable performance from the virtual machines on that host

- NUMA spanning won’t degrade the performance of the host

On the downside:

- You lose agility: with NUMA spanning disabled, you cannot live migrate a virtual machine to a smaller host where it would have to split across NUMA nodes

- A virtual machine will not be able to boot up if it cannot fit inside a single NUMA node
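Both downsides come from the same rule: with NUMA spanning disabled, the virtual machine has to fit inside a single physical NUMA node. A simplified Python model of that check follows. It illustrates the rule as stated above rather than Hyper-V’s actual placement logic: `HostNode` and `can_start_without_spanning` are hypothetical, vCPUs are only compared against a node’s logical processor count, and memory is compared against that node’s free memory. The same check would apply to a live migration destination.

```python
from dataclasses import dataclass


@dataclass
class HostNode:
    lps: int      # logical processors in this physical NUMA node
    free_mb: int  # memory not yet assigned in this node


def can_start_without_spanning(vcpus: int, memory_mb: int, nodes: list[HostNode]) -> bool:
    # The VM must fit inside ONE node: enough logical processors for its
    # vCPUs and enough free memory in that same node. Free memory on
    # different nodes cannot be combined.
    return any(vcpus <= n.lps and memory_mb <= n.free_mb for n in nodes)


nodes = [HostNode(lps=12, free_mb=20_000), HostNode(lps=12, free_mb=30_000)]
print(can_start_without_spanning(8, 24_000, nodes))  # True: fits in the second node
print(can_start_without_spanning(8, 40_000, nodes))  # False, despite 50,000 MB free in total
print(can_start_without_spanning(24, 8_000, nodes))  # False: 24 vCPUs > 12 LPs per node
```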

Personally, I have never seen NUMA spanning disabled on a host, but I guess Microsoft left the setting there for a reason.

Dynamic Memory

As you’ve seen so far, guest-aware NUMA enables the guest OS and NUMA-aware applications running in virtual machines to optimize where they allocate memory from. Hyper-V has another memory optimization feature: Dynamic Memory, which optimizes how much memory is assigned to a virtual machine. It measures memory pressure in the guest OS and assigns just enough RAM to service the needs of the machine, plus some spare capacity as a buffer.
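Hyper-V lets you configure a minimum RAM, a maximum RAM, and a memory buffer percentage for a virtual machine that uses Dynamic Memory. The Python function below is a deliberately simplified model of how those settings might combine into a target allocation: measured demand plus the buffer, clamped to the minimum and maximum. The formula is an assumption for illustration, not Hyper-V’s actual memory balancer algorithm, and `dynamic_memory_target` is a hypothetical name.

```python
def dynamic_memory_target(demand_mb: int, buffer_pct: int,
                          minimum_mb: int, maximum_mb: int) -> int:
    # Simplified model: just enough RAM for the measured demand,
    # plus a percentage of that demand as spare capacity...
    target = demand_mb + demand_mb * buffer_pct // 100
    # ...kept within the virtual machine's configured minimum and maximum RAM.
    return max(minimum_mb, min(target, maximum_mb))


print(dynamic_memory_target(2000, 20, 512, 8192))  # 2400
print(dynamic_memory_target(300, 20, 512, 8192))   # 512 (raised to the minimum)
print(dynamic_memory_target(8000, 20, 512, 8192))  # 8192 (capped at the maximum)
```

Because the target moves whenever demand changes, the amount of memory (and where it lives on the host) is never fixed, which is the root of the conflict described next.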

The on-the-fly nature of Dynamic Memory conflicts with guest-aware NUMA, which is static: it reveals the physical NUMA boundaries only when the virtual machine boots. If Dynamic Memory keeps changing the memory behind those boundaries, the topology the guest was given at boot can no longer be trusted. That is why, when you enable Dynamic Memory in a virtual machine, you lose guest-aware NUMA.

Choosing Dynamic Memory or Guest-Aware NUMA

So what’s the best feature to pick? I have a simple decision-making process for each virtual machine:

- If my virtual machine fits inside a NUMA node, and the guest OS and applications support Dynamic Memory, then I enable Dynamic Memory.

- If my virtual machine spans more than one NUMA node, but capacity optimization is more important than peak performance, then I enable Dynamic Memory.

- If my virtual machine will span more than one NUMA node, and I need peak performance, then I use static memory, leaving me with guest-aware NUMA.

- For all other virtual machines, I use static memory, leaving me with guest-aware NUMA.
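The decision process above can be written as a small Python function. `choose_memory_mode` and its parameters are hypothetical names. One assumption goes beyond the list: the second rule also requires that the guest OS and applications support Dynamic Memory, since enabling it for a guest that cannot use it makes little sense.

```python
def choose_memory_mode(spans_numa_nodes: bool, dm_supported: bool,
                       need_peak_performance: bool) -> str:
    # Rule 1: fits inside one NUMA node and the guest supports Dynamic Memory.
    if not spans_numa_nodes and dm_supported:
        return "dynamic memory"
    # Rule 2: spans NUMA nodes, but capacity matters more than peak performance.
    # (Assumption: Dynamic Memory support is still required here.)
    if spans_numa_nodes and dm_supported and not need_peak_performance:
        return "dynamic memory"
    # Rules 3 and 4: everything else keeps static memory and guest-aware NUMA.
    return "static memory (guest-aware NUMA)"


print(choose_memory_mode(False, True, True))   # dynamic memory
print(choose_memory_mode(True, True, False))   # dynamic memory
print(choose_memory_mode(True, True, True))    # static memory (guest-aware NUMA)
print(choose_memory_mode(False, False, False)) # static memory (guest-aware NUMA)
```

Any combination not matched by the first two rules falls through to static memory, mirroring the last bullet.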

So there you have it! There are lots of hows and whys, but the whole thing boils down to a simple formula.