We are coming to the end of another calendar year, and it’s time for those high-in-comedic-content prediction posts from industry analysts to make an appearance. I am still waiting for my PC to be replaced by a VDI VM, but it appears that this annual prediction is as worthless as the promise that I’d be commuting to work by jetpack by now. I expect we’ll be swamped with “2014 will be the year of the cloud” prognostications in the coming weeks, so I thought I would focus on what the talking points for Hyper-V are likely to be over the next 12 months.

Windows Azure Pack

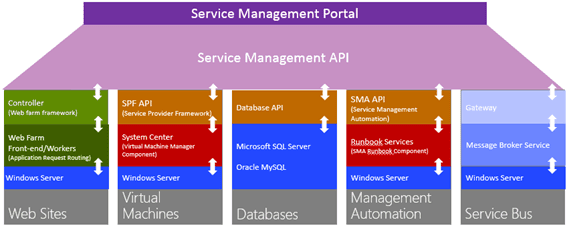

The Windows Azure Pack (WAP) is the front end of Microsoft’s framework for building a true cloud with self-service. The product is based on the portal you use to deploy services on Azure, Microsoft’s public cloud. As such, the first version (codenamed “Katal”) was aimed at service providers (hosting companies). The second version of WAP is aimed at service-centric organizations; in other words, WAP for Windows Server 2012 R2 is designed for organizations that want to deploy public, private, or hybrid (integrating public and private) clouds.

Medium-to-large enterprises and hosting companies have expressed quite a bit of interest in WAP over the past year. I personally believe that larger hosting companies are already invested in open-source alternatives, but the evolution of Hyper-V’s capabilities and the promise of a REST-capable cloud that is tightly integrated with System Center are attractive. 2014 might be a big year for converting traditional, IT-centric virtualization farms into self-service-capable private clouds in medium-to-large enterprises.

This could be the time for infrastructure consultants and architects to learn about WAP. It is a very different beast from SCVMM, so expect a learning curve. Damian Flynn has been writing about WAP here on the Petri IT Knowledgebase, and Dutch consultant Mark van Eijk has been documenting the product (in English), based on his own experience, on the Hyper-V.nu site.

Hyper-V Network Virtualization

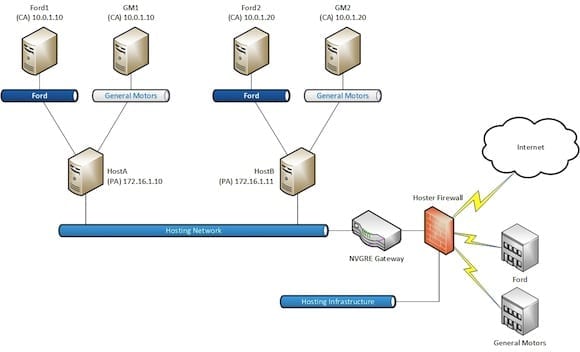

I previously wrote a Petri IT Knowledgebase article introducing Hyper-V Network Virtualization (HNV, also known as Windows Network Virtualization or WNV). HNV does to networks what machine virtualization does to computers. Machine virtualization creates software-defined machines that simulate physical machines; HNV uses software-defined networking, based on a protocol called NVGRE, to simulate networks and VLANs. This accomplishes a number of things:

- Enables self-service deployment of networks

- Isolates tenants (customers) on a cloud easily, without touching the firewall

- Scales to up to 16 million virtual subnets (NVGRE carries a 24-bit virtual subnet ID), far more than the maximum of 4,096 VLANs (a 12-bit VLAN ID)

- Reduces administrative load

- Allows for overlapping IP subnets thanks to the network abstraction provided by NVGRE
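To make the 16 million figure and the overlapping subnets concrete, here is a minimal Python sketch of the encapsulation step. The header layout follows the NVGRE specification (RFC 7637): a GRE header with only the Key bit set, protocol type 0x6558 (Transparent Ethernet Bridging), and a 32-bit key holding a 24-bit virtual subnet ID (VSID) plus an 8-bit FlowID. The lookup table, VSIDs, and addresses are hypothetical; in a real deployment the hosts' network virtualization policy (managed by SCVMM) maps each tenant's customer address (CA) to the provider address (PA) of the host running the VM. The sketch ignores reserved VSID ranges and the outer IP/Ethernet headers.

```python
import struct
from ipaddress import IPv4Address
from typing import Tuple

GRE_FLAGS_KEY_PRESENT = 0x2000       # only the K (key present) bit is set
PROTO_TRANSPARENT_ETHERNET = 0x6558  # GRE payload is a whole Ethernet frame
VSID_BITS = 24                       # NVGRE virtual subnet ID width
VLAN_ID_BITS = 12                    # 802.1Q VLAN ID width, for comparison


def nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Build the 8-byte GRE header that NVGRE puts in front of a tenant frame."""
    if not 0 <= vsid < (1 << VSID_BITS):
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id <= 0xFF:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id  # VSID occupies the top 24 bits of the GRE key
    return struct.pack("!HHI", GRE_FLAGS_KEY_PRESENT, PROTO_TRANSPARENT_ETHERNET, key)


def vsid_from_header(header: bytes) -> int:
    """Recover the VSID from an NVGRE header (receive side)."""
    _flags, proto, key = struct.unpack("!HHI", header[:8])
    if proto != PROTO_TRANSPARENT_ETHERNET:
        raise ValueError("not an NVGRE frame")
    return key >> 8


# Hypothetical policy: (VSID, customer address) -> provider address of the host.
# Two tenants both use 10.0.0.5 without conflict because their VSIDs differ.
LOOKUP = {
    (5001, IPv4Address("10.0.0.5")): IPv4Address("192.168.10.11"),
    (6001, IPv4Address("10.0.0.5")): IPv4Address("192.168.10.12"),
}


def encapsulate(vsid: int, customer_dst: str) -> Tuple[IPv4Address, bytes]:
    """Return the provider address to send to and the NVGRE header to prepend."""
    provider_dst = LOOKUP[(vsid, IPv4Address(customer_dst))]
    return provider_dst, nvgre_header(vsid)


print(1 << VSID_BITS, 1 << VLAN_ID_BITS)  # 16777216 4096
print(nvgre_header(5001).hex())           # 2000655800138900
print(encapsulate(6001, "10.0.0.5")[0])   # 192.168.10.12
```

Both tenants can use 10.0.0.5 because the lookup key is the (VSID, CA) pair rather than the address alone.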

HNV was introduced in Windows Server 2012 (WS2012), but the ecosystem was not ready. NVGRE appliances (physical or virtual) were required for NAT (Internet access), NVGRE gateway (physical-to-virtual routing), and VPN (hybrid networking) functions. Only one manufacturer released an NVGRE gateway appliance, and it was very expensive.

Windows Server 2012 R2 (WS2012 R2) came with a number of enhancements that make HNV a realistic solution.

- Virtual NVGRE gateway appliance: You can deploy guest clusters of WS2012 R2 VMs onto a small Hyper-V cluster in the edge network to bridge virtual and physical networks.

- Extension compatibility: Virtual switch extensions such as the Cisco Nexus 1000V and 5nine Security for Hyper-V are compatible with Microsoft’s SDN, unlike other less mature offerings.

- Address learning: Tenants can supply their own IP addresses in their virtual subnets, either statically or via DHCP, and HNV will learn those addresses.

- NVGRE offload: Processing NVGRE can add unwanted load on host processors (though most are quite underutilized). You can use a NIC that supports NVGRE offload to relieve this pressure.

- Extended port ACLs: You can create measurement and isolation rules based on source/destination address or port to implement finer-grained isolation within guest networks.
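The extended port ACLs item can be illustrated with a simplified model of how weighted rules choose an action for an inbound packet. This is a hypothetical Python sketch, not the Hyper-V switch's actual evaluation logic: it assumes the highest-weight matching rule wins (loosely mirroring the `-Weight` parameter of the `Add-VMNetworkAdapterExtendedAcl` PowerShell cmdlet), ignores direction and stateful rules, and uses made-up subnets and ports.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class AclRule:
    action: str                # "Allow" or "Deny"
    remote_prefix: str         # remote address range, e.g. "10.0.1.0/24"
    local_port: Optional[int]  # None matches any local port
    protocol: Optional[str]    # "TCP", "UDP", or None for any protocol
    weight: int                # assumption: the highest-weight matching rule wins


def evaluate(rules: List[AclRule], remote_ip: str, local_port: int,
             protocol: str, default: str = "Allow") -> str:
    """Return the action of the highest-weight rule matching one inbound packet."""
    addr = ip_address(remote_ip)
    for rule in sorted(rules, key=lambda r: r.weight, reverse=True):
        if addr not in ip_network(rule.remote_prefix):
            continue
        if rule.local_port is not None and rule.local_port != local_port:
            continue
        if rule.protocol is not None and rule.protocol != protocol:
            continue
        return rule.action
    return default  # no rule matched this packet


# Hypothetical policy for a tenant's database VM:
# deny everything, then allow SQL Server traffic only from the web tier subnet.
db_rules = [
    AclRule("Deny", "0.0.0.0/0", None, None, weight=1),
    AclRule("Allow", "10.0.1.0/24", 1433, "TCP", weight=10),
]

print(evaluate(db_rules, "10.0.1.20", 1433, "TCP"))  # Allow: web tier to SQL port
print(evaluate(db_rules, "10.0.3.9", 1433, "TCP"))   # Deny: wrong subnet
print(evaluate(db_rules, "10.0.1.20", 3389, "TCP"))  # Deny: wrong port
```

The low-weight deny-all rule isolates the VM, while the higher-weight allow rule opens a single port to a single subnet, which is the kind of in-tenant isolation that port ACLs make possible.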

Combined with the necessary System Center Virtual Machine Manager (SCVMM) 2012 R2, HNV is ready for real-world deployments. There is much to learn, and fellow MVPs Kristian Nese and Flemming Riis have published a whitepaper on how to deploy Hyper-V Network Virtualization, Microsoft’s software-defined networking (SDN) solution, using Windows Server and System Center 2012 R2.

Scale-Out File Server (SOFS)

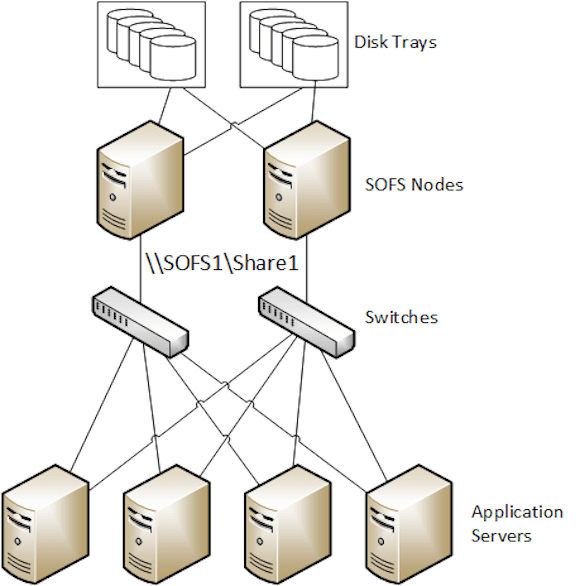

When I have presented on WS2012 R2 during this launch season, one thing that has grabbed people’s attention is the alternative storage connectivity that the Scale-Out File Server (SOFS) offers. I have invested a lot of my time in this subject because SOFS deployments offer a software-defined alternative to traditional SAN LUNs. When combined with Storage Spaces, an SOFS can even be a more economical alternative to the SAN: for some organizations, the SOFS will be another tier of storage; for others, it can replace the SAN completely. In fact, you can use a multi-brick deployment to abstract multiple SANs and Storage Spaces deployments behind the namespace of a single SOFS cluster!

While SOFS was possible with WS2012, there was a shortage of knowledge, experience, documentation, and even hardware if you were using Storage Spaces. The list of supported hardware for Storage Spaces is growing; please use only supported hardware for predictable results and performance! Between various Microsoft blogs (particularly Jose Barreto’s) and community members such as myself, a lot of documentation has appeared in the last year.

Storage is critical to virtualization, and software-defined storage is critical to self-service clouds. The simplicity, low cost, and networking performance offered by a well-designed and well-implemented SOFS are too attractive to ignore, so we should expect interest in this architecture to increase in 2014.

RDMA Networking

Remote Direct Memory Access (RDMA) can be thought of as a way to transfer data directly from the RAM of one server to the RAM of another. This offloads the transfer from the traditional networking stack, which reduces processor load and increases throughput. This is why Microsoft created SMB Direct to leverage RDMA-capable networks when it designed SMB 3.0 as an enterprise-class data protocol to compete with incumbents such as iSCSI and Fibre Channel.

While you do not need RDMA to implement an SOFS, it does improve the performance of the solution, especially if you have lots of Hyper-V hosts/clusters using a relatively small number of SOFS nodes to store their virtual machines. RDMA improves the performance of the VMs’ storage, and by reducing processor load, it also increases the amount of SMB client activity that each SOFS node can handle.

Another perk of investing in SMB 3.0 networking is that you can converge your cluster and Live Migration networks onto the same investment. Redirected IO uses SMB 3.0, so it can be much faster with SMB Multichannel (two or more networks) and SMB Direct. And in WS2012 R2, we can use SMB 3.0 for Live Migration. Imagine VMs with 50 GB of RAM moving from one host to another in 30 seconds (or less!) via Live Migration. That’s faster than a VM with 4 GB of RAM currently migrates over a 1 GbE network.
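A back-of-the-envelope check of that comparison, as a minimal Python sketch. It assumes 1 GB = 10^9 bytes and perfect aggregation across links (what SMB Multichannel aims for), and it counts only a single copy of memory, ignoring protocol overhead, compression, and the extra passes Live Migration makes for pages dirtied during the move, so real migrations take longer than these lower bounds.

```python
def copy_seconds(ram_gb: float, link_gbps: float, links: int = 1) -> float:
    """Lower bound for one pass over a VM's memory across `links` equal links.

    Assumes 1 GB = 10**9 bytes, perfect link aggregation, and no overhead or
    re-copying of memory pages dirtied during the migration.
    """
    bits = ram_gb * 8e9
    return bits / (link_gbps * 1e9 * links)


def required_gbps(ram_gb: float, seconds: float) -> float:
    """Sustained throughput (Gbps) needed to move `ram_gb` of memory in `seconds`."""
    return ram_gb * 8 / seconds


print(copy_seconds(4, 1))             # 32.0 seconds: 4 GB VM over one 1 GbE link
print(required_gbps(50, 30))          # about 13.3 Gbps: 50 GB VM in 30 seconds
print(copy_seconds(50, 10, links=2))  # 20.0 seconds: 50 GB VM over two 10 GbE links
```

Even a perfect 1 GbE copy of 4 GB takes at least 32 seconds, while moving 50 GB in 30 seconds needs roughly 13.3 Gbps sustained, which two 10 GbE NICs could in theory deliver.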

The benefits are:

- Better consolidation and performance for storage, far more than iSCSI or Fibre Channel can offer

- Much less impact (almost unnoticeable) from redirected IO when it is required, such as with an SOFS using mirrored Storage Spaces

- Crazy-fast Live Migration: load balancing kicks in before users have an opportunity to notice, proactive measures such as host maintenance complete faster, and recovering from the loss of protected networks has less impact on services.

V2V Migrations

The Hyper-V community is abuzz with conversations about company X dumping alternatives because the Hyper-V and System Center package is ready, more capable for their requirements, and much cheaper. In my own conversations with customers around Europe, WS2012 was the tipping point, and WS2012 R2 adds so much more, particularly for those precious public cloud hosting companies and Fortune 1000 enterprises.

This might be my “year of VDI” albatross in the making, but I think that 2014 might be the year when Microsoft’s marketing gets to crow about large customers making the jump.